The robot software development environment ArmarX (sources available at https://git.h2t.iar.kit.edu/sw/armarx/) provides a modular framework for creating and managing distributed robot software components. It builds on standardized interfaces and on versatile components that can be customized, extended, or parameterized to match the capabilities of a specific robot and the requirements of an application. To support the development workflow, ArmarX also offers a toolkit that includes plug-in-based graphical user interfaces, real-time inspection utilities, and system monitoring tools.

Cognitive Robot Architecture

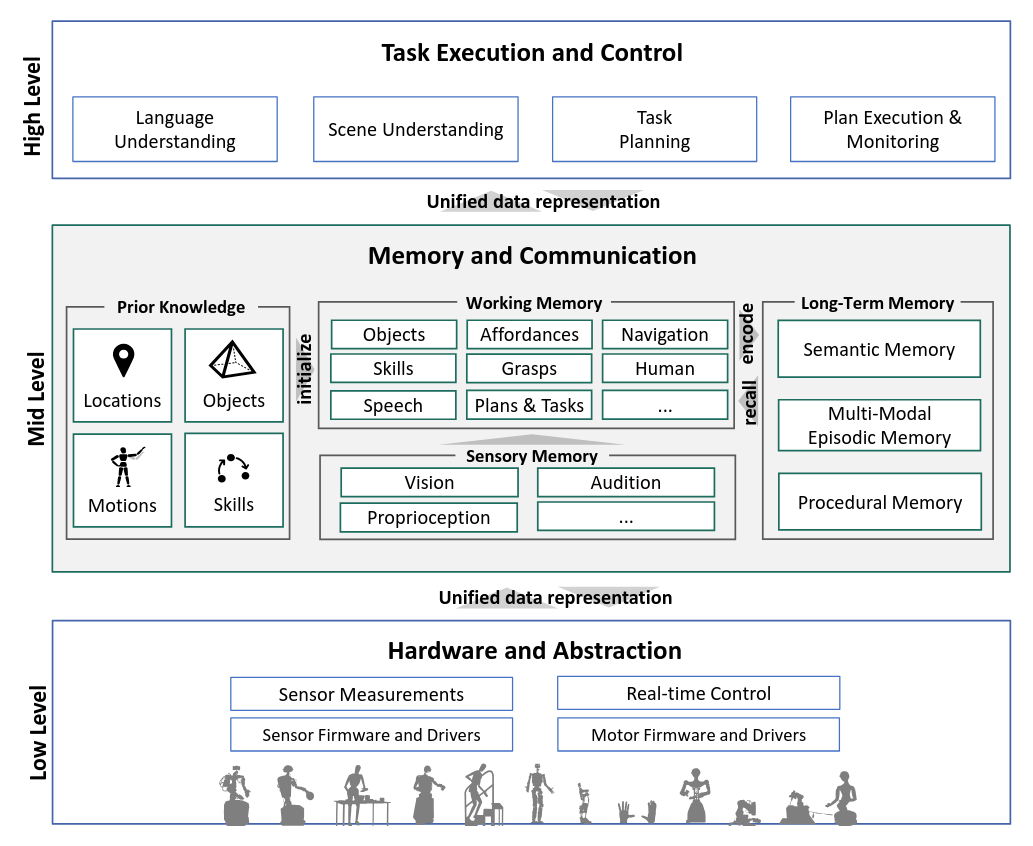

ArmarX was developed to implement a three-layer cognitive robot architecture. At the core of this architecture lies the memory system, which mediates between high-level abilities, which typically operate on symbolic representations, and low-level abilities such as sensor data processing and sensorimotor control. High-level abilities include natural language understanding, scene understanding, planning, plan execution monitoring, and reasoning; they rely on the memory system to coordinate with the low-level abilities.

The low-level layer provides hardware drivers and hardware abstraction for interacting with the robot's physical components, and it is responsible for sensor data processing and real-time control. The high-level layer implements methods at the semantic level, such as natural language understanding, scene understanding, task planning, and plan execution monitoring. The mid-level layer mediates between the low-level and high-level layers by offering essential services such as inverse kinematics, motion planning, and trajectory execution. It also incorporates the robot memory system, which comprises prior knowledge, working memory, and long-term memory, as well as perception capabilities such as object recognition and self-localization. Together, these layers form a cohesive framework for cognitive robot control that integrates symbolic planning and reasoning with sensorimotor execution (Peller et al. 2023).
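
The mediating role of memory across the three layers can be illustrated with a small Python sketch. It is a hypothetical, heavily simplified illustration and not ArmarX code: `WorkingMemory`, `low_level_perception`, `high_level_plan`, and `mid_level_execute` are invented names, and only a working memory is modeled (no prior knowledge or long-term memory). Perception writes metric object poses into memory, the planner reasons only over object names, and the mid-level step grounds each symbolic action in a pose read back from memory.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical sketch of memory-mediated layers; none of these names are ArmarX APIs.
Pose = tuple[float, float, float]  # x, y, z in meters


@dataclass
class WorkingMemory:
    """Simplified mid-level memory: only a working memory of object poses."""

    object_poses: dict[str, Pose] = field(default_factory=dict)

    def commit_pose(self, name: str, pose: Pose) -> None:
        # Written by the low-level layer after sensor data processing.
        self.object_poses[name] = pose

    def known_objects(self) -> set[str]:
        # Symbolic view for the high-level layer: object names only.
        return set(self.object_poses)

    def pose_of(self, name: str) -> Pose | None:
        # Metric view for the mid-level layer; None if the object is unknown.
        return self.object_poses.get(name)


def low_level_perception(memory: WorkingMemory, detections: dict[str, Pose]) -> None:
    """Low-level layer: stores (here, given) detections in memory."""
    for name, pose in detections.items():
        memory.commit_pose(name, pose)


def high_level_plan(memory: WorkingMemory, goal_object: str) -> list[tuple[str, str]]:
    """High-level layer: builds a symbolic plan from object names alone."""
    if goal_object not in memory.known_objects():
        return [("search", goal_object)]
    return [("move_to", goal_object), ("grasp", goal_object)]


def mid_level_execute(
    memory: WorkingMemory, plan: list[tuple[str, str]]
) -> list[tuple[str, Pose | None]]:
    """Mid-level layer: grounds each symbolic step in a metric target from memory."""
    return [(action, memory.pose_of(obj)) for action, obj in plan]
```

For example, committing a detection of `cup` changes the plan from `[("search", "cup")]` to a move-and-grasp sequence, while the planner itself never handles sensor data.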

Software Structure

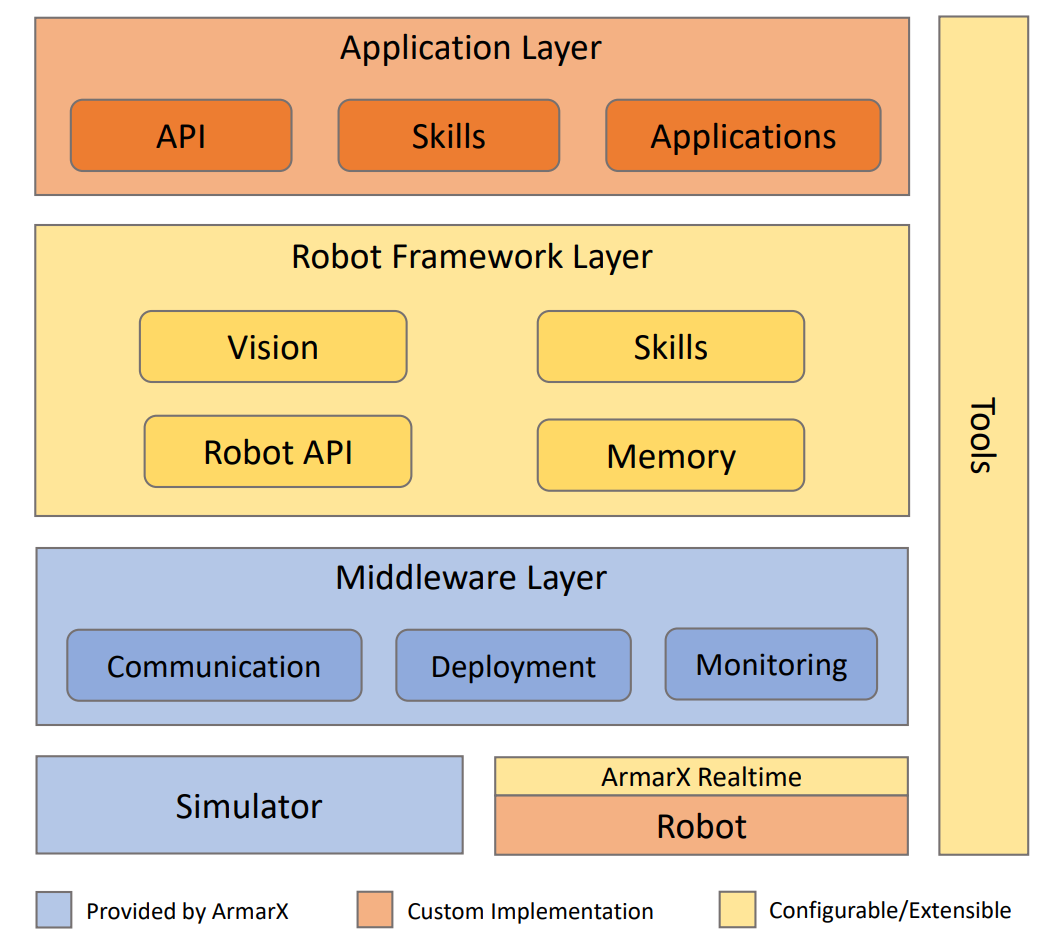

The middleware layer provides the infrastructure required to develop distributed applications. It abstracts communication mechanisms, offers key building blocks for distributed systems (e.g., ArmarXCore), and includes entry points for visualization, debugging, and system monitoring. Because communication is handled by this layer, components can interact with one another without implementing the underlying communication details themselves, which supports efficient and scalable robot software development.
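
To make the idea of abstracting communication concrete, the following sketch shows topic-based publish/subscribe, one common pattern a middleware can use to decouple components. This is an assumption for illustration: `TopicBroker` is a hypothetical in-process class, not the ArmarX middleware API, and a real middleware would additionally deliver messages across processes and machines.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Any, Callable

# Hypothetical in-process broker; not the ArmarX middleware API.


class TopicBroker:
    """Routes messages by topic name so components never reference each other."""

    def __init__(self) -> None:
        self._subscribers: defaultdict[str, list[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        # A consumer registers interest in a topic, not in a specific producer.
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> int:
        # Deliver the message to every current subscriber of the topic and
        # return how many received it. .get() avoids creating empty entries
        # for topics nobody listens to.
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)
```

A producer publishes to a topic name such as `"JointStates"` (a hypothetical topic name) without knowing who consumes it, so monitoring or visualization components can be attached or removed without changing the producer.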

The robot framework layer comprises several projects that provide high-level access to sensorimotor capabilities, including modules for memory, robot and world modeling, perception, and execution. The core components of the middleware layer are further specialized in RobotAPI, which offers interfaces to the functionality provided by the robot framework layer.

Developers can extend and specialize these interfaces to implement a robot-specific API tailored to their hardware and application needs. Such an API typically includes implementations for accessing the robot's low-level sensorimotor functions, together with models specific to that robot. Depending on the application, it may also incorporate specialized memory structures, instances of perceptual functions, and control algorithms. In addition, basic skills are provided through dedicated skill packages, for example for control, manipulation, and navigation.
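
The relationship between a generic interface and a robot-specific API can be sketched with an abstract base class. The sketch assumes a hypothetical generic `KinematicUnit` interface and a hypothetical `HypotheticalArmKinematicUnit` implementation whose joint names and limits are invented; it does not reproduce the actual RobotAPI interfaces. The robot-specific class fulfills the generic contract, applies its own model (joint limits), and adds an extension, `limits`, that generic code does not need to know about.

```python
from __future__ import annotations

from abc import ABC, abstractmethod

# Hypothetical names throughout; this is not the RobotAPI interface.


class KinematicUnit(ABC):
    """Generic, robot-agnostic joint interface."""

    @abstractmethod
    def joint_names(self) -> list[str]: ...

    @abstractmethod
    def set_joint_angles(self, targets: dict[str, float]) -> None: ...

    @abstractmethod
    def joint_angles(self) -> dict[str, float]: ...


class HypotheticalArmKinematicUnit(KinematicUnit):
    """Robot-specific implementation with an invented two-joint model."""

    # Robot-specific model: joint limits in radians (invented values).
    LIMITS: dict[str, tuple[float, float]] = {"shoulder": (-1.5, 1.5), "elbow": (0.0, 2.5)}

    def __init__(self) -> None:
        # Stands in for state that a hardware driver would report.
        self._angles = {name: 0.0 for name in self.LIMITS}

    def joint_names(self) -> list[str]:
        return list(self.LIMITS)

    def set_joint_angles(self, targets: dict[str, float]) -> None:
        for name, value in targets.items():
            low, high = self.LIMITS[name]  # KeyError for joints this robot lacks
            # Clamp to this robot's limits before applying the command.
            self._angles[name] = min(max(value, low), high)

    def joint_angles(self) -> dict[str, float]:
        return dict(self._angles)

    def limits(self, name: str) -> tuple[float, float]:
        # Extension beyond the generic interface, for robot-specific code.
        return self.LIMITS[name]
```

Code written against `KinematicUnit` can drive this robot unchanged, while code that knows it is talking to this particular robot can also call `limits`.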

In the application layer, the final robot program is implemented as a distributed application that uses both the generic and the robot-specific APIs. It draws on two kinds of skills:

- Robot-Agnostic Skills: These skills range from low-level functionalities, such as basic joint position control, to higher-level capabilities like visual servoing and object manipulation. They are designed to be reusable across different robots.

- Robot-Specific Skills: These are tailored for specific robots, such as ARMAR-III and ARMAR-6, to address their unique hardware and application requirements.
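
The distinction between the two kinds of skills can be illustrated with a short sketch. Here `move_joints_skill` is robot-agnostic because it depends only on a minimal structural interface (`JointControl`, a `typing.Protocol`), so it runs unchanged on two invented robots. `grasp_with_hand_skill` is robot-specific because it also calls `close_hand`, a capability that only `FakeRobotB` offers. All of these names are hypothetical and do not come from ArmarX.

```python
from __future__ import annotations

from typing import Protocol

# Hypothetical sketch; none of these names come from ArmarX.


class JointControl(Protocol):
    """Minimal generic interface that a robot-agnostic skill depends on."""

    def set_joint_angles(self, targets: dict[str, float]) -> None: ...

    def joint_angles(self) -> dict[str, float]: ...


def move_joints_skill(robot: JointControl, targets: dict[str, float], tolerance: float = 1e-3) -> bool:
    """Robot-agnostic skill: command joint targets and check that they were reached."""
    robot.set_joint_angles(targets)
    reached = robot.joint_angles()
    return all(abs(reached[name] - goal) <= tolerance for name, goal in targets.items())


class FakeRobotA:
    """Invented robot with a single elbow joint."""

    def __init__(self) -> None:
        self._angles = {"elbow": 0.0}

    def set_joint_angles(self, targets: dict[str, float]) -> None:
        self._angles.update(targets)

    def joint_angles(self) -> dict[str, float]:
        return dict(self._angles)


class FakeRobotB(FakeRobotA):
    """Invented robot that additionally has a shoulder joint and a hand."""

    def __init__(self) -> None:
        super().__init__()
        self._angles["shoulder"] = 0.0
        self.hand_closed = False

    def close_hand(self) -> None:
        self.hand_closed = True


def grasp_with_hand_skill(robot: FakeRobotB) -> bool:
    """Robot-specific skill: reuses the generic skill, then uses robot B's hand."""
    reached = move_joints_skill(robot, {"shoulder": 0.8, "elbow": 1.2})
    robot.close_hand()
    return reached and robot.hand_closed
```

Using a structural protocol rather than a shared base class is a design choice of this sketch: any object with the right methods can be passed to the robot-agnostic skill.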

This layered approach ensures modularity and flexibility, enabling developers to efficiently create and adapt robot programs for various tasks and platforms.

The ArmarX toolset is designed to simplify the development of robot applications. At its center is the ArmarX GUI, a graphical interface that includes a 3D visualization of the robot's environment. The GUI is extensible through plugins, so custom widgets can be integrated for specific tasks, such as the PlatformUnit widget for manual control of the mobile base. It also includes inspection tools for monitoring the health and state of the system, which support debugging and system management.

ArmarX integrates both kinematic and physics-based simulators to facilitate the development and testing of robot applications. The simulation environment provides a set of ready-to-use components and units that, through the hardware abstraction layer (HAL), mimic the behavior of a real robot. Because of this abstraction, robot programs, GUI plugins, and other components interact with the simulated robot in the same way as they would with real hardware. Consequently, developers can switch between simulation and real-world deployment without modifying the underlying code or interfaces.
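
The effect of a hardware abstraction layer can be sketched as follows: application code is written against an abstract interface, and a single factory decides whether a simulated or a real backend is used. Everything here is hypothetical and not ArmarX API: `PlatformHAL`, `SimulatedPlatform`, `RealPlatform`, `make_platform`, and `drive_forward` are invented names, the simulated base is a purely kinematic integrator, and the real backend is a placeholder that raises `NotImplementedError`.

```python
from __future__ import annotations

from abc import ABC, abstractmethod

# Hypothetical HAL sketch; these classes are not ArmarX APIs.
Pose2D = tuple[float, float, float]  # x [m], y [m], theta [rad]


class PlatformHAL(ABC):
    """Abstract interface for a mobile base, shared by simulation and hardware."""

    @abstractmethod
    def move_velocity(self, vx: float, vy: float, omega: float, dt: float) -> None: ...

    @abstractmethod
    def pose(self) -> Pose2D: ...


class SimulatedPlatform(PlatformHAL):
    """Kinematic simulation: integrates commanded velocities, no physics."""

    def __init__(self) -> None:
        self._x = self._y = self._theta = 0.0

    def move_velocity(self, vx: float, vy: float, omega: float, dt: float) -> None:
        # For simplicity, velocities are interpreted in the world frame.
        self._x += vx * dt
        self._y += vy * dt
        self._theta += omega * dt

    def pose(self) -> Pose2D:
        return (self._x, self._y, self._theta)


class RealPlatform(PlatformHAL):
    """Placeholder for a hardware-backed implementation."""

    def move_velocity(self, vx: float, vy: float, omega: float, dt: float) -> None:
        raise NotImplementedError("would forward the command to the robot's driver")

    def pose(self) -> Pose2D:
        raise NotImplementedError("would read odometry from the robot")


def make_platform(use_simulation: bool) -> PlatformHAL:
    # The only place in the program that knows which backend is active.
    return SimulatedPlatform() if use_simulation else RealPlatform()


def drive_forward(platform: PlatformHAL, distance: float, speed: float = 0.5, dt: float = 0.1) -> Pose2D:
    """Application code: identical for simulated and real platforms."""
    steps = round(distance / (speed * dt))  # distance rounded to whole control steps
    for _ in range(steps):
        platform.move_velocity(speed, 0.0, 0.0, dt)
    return platform.pose()
```

`drive_forward` never inspects which backend it received; switching from simulation to hardware only changes the argument passed to `make_platform`.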